How Intel makes sure the FDIV bug never happens again

The people and breakthroughs behind Intel’s quiet revolution in formal verification

FDIV

This historic issue has been extensively analyzed and reported on. Here’s a brief summary:

In 1994, a math professor discovered anomalies in calculations with Intel Pentium CPUs.

Intel attempted to brush it under the rug, claiming it isn’t a big deal.

However, the whole situation blew up, resulting in a recall that cost Intel ~$500 million.

The problem

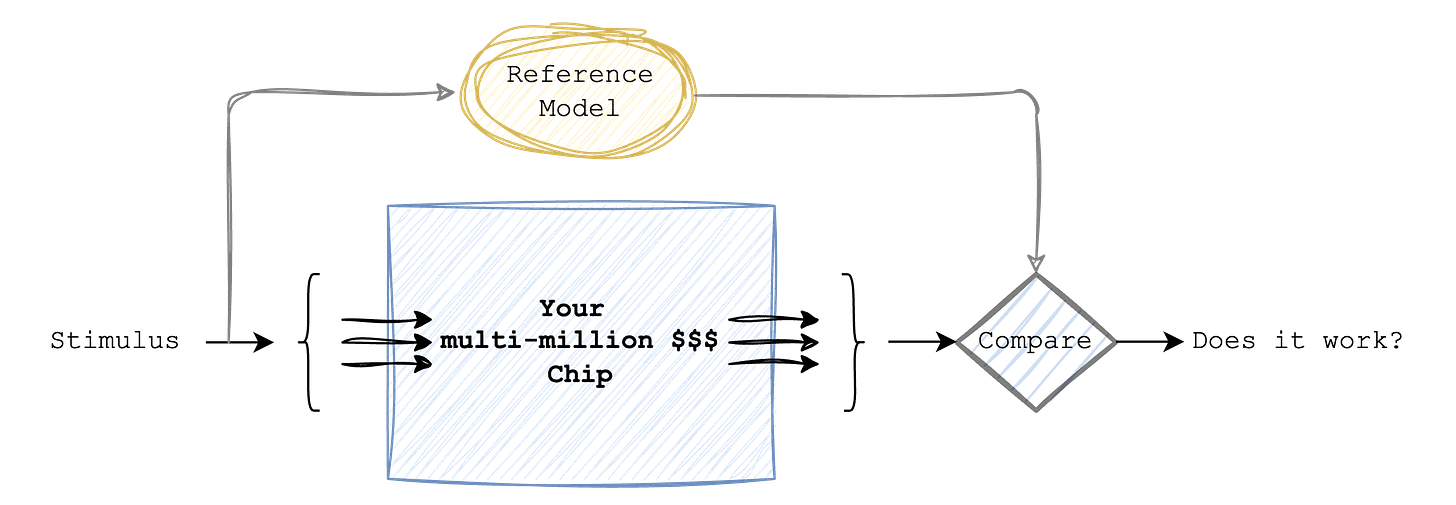

To understand how a problem like this goes undetected before manufacturing, we must understand how chips are tested and verified before fabrication.

Pre-silicon verification (i.e., testing of chip designs before they are sent for fabrication) is primarily done through a method called simulation; this was true in the 90s and even today. This method involves writing tests that stimulate the design with all possible combinations of inputs and then observing if it works as expected.

Companies such as Apple, Intel, AMD, and Nvidia, spend considerable effort on simulating chip designs before tape-out. The memory, compute, and licenses for special tools in themselves cost several million dollars, and a fleet of machines regress through massive test suites 24x7x365 in the hopes of uncovering any design flaws.

But, even with such a rigorous process, bugs such as the FDIV have shown us that this method of testing is insufficient.

The issue is that simulation is inherently an incomplete process. It's analogous to using brute force to solve an issue.

This strategy works well for many designs, but for others, such as a Floating Point Unit (FPU), you will never cover all input combinations in a single lifetime.

To be fair, there is a reason why simulation is the primary method used in the industry for chip verification.

+ There is an ecosystem of mature industry tools and methodologies that have been used to successfully tape-out chips over the past decades.

+ During pre-silicon verification, chip designs are often decomposed into smaller modules, for which simulation generally works well.The solution: Verification using Formal methods

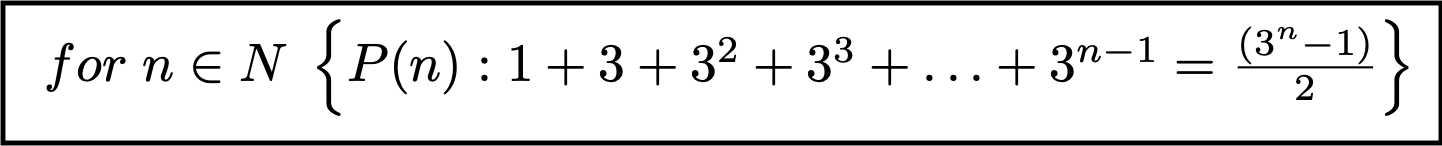

Consider this equation,

There are two ways to verify if it is correct.

Brute force: If you take a simulation-like approach, you might try 10,000 random values for n. If it works, you will then have to optimistically conclude that the equation is right. But, if we’re being honest, you haven’t exhaustively proven that the equation is indeed correct.

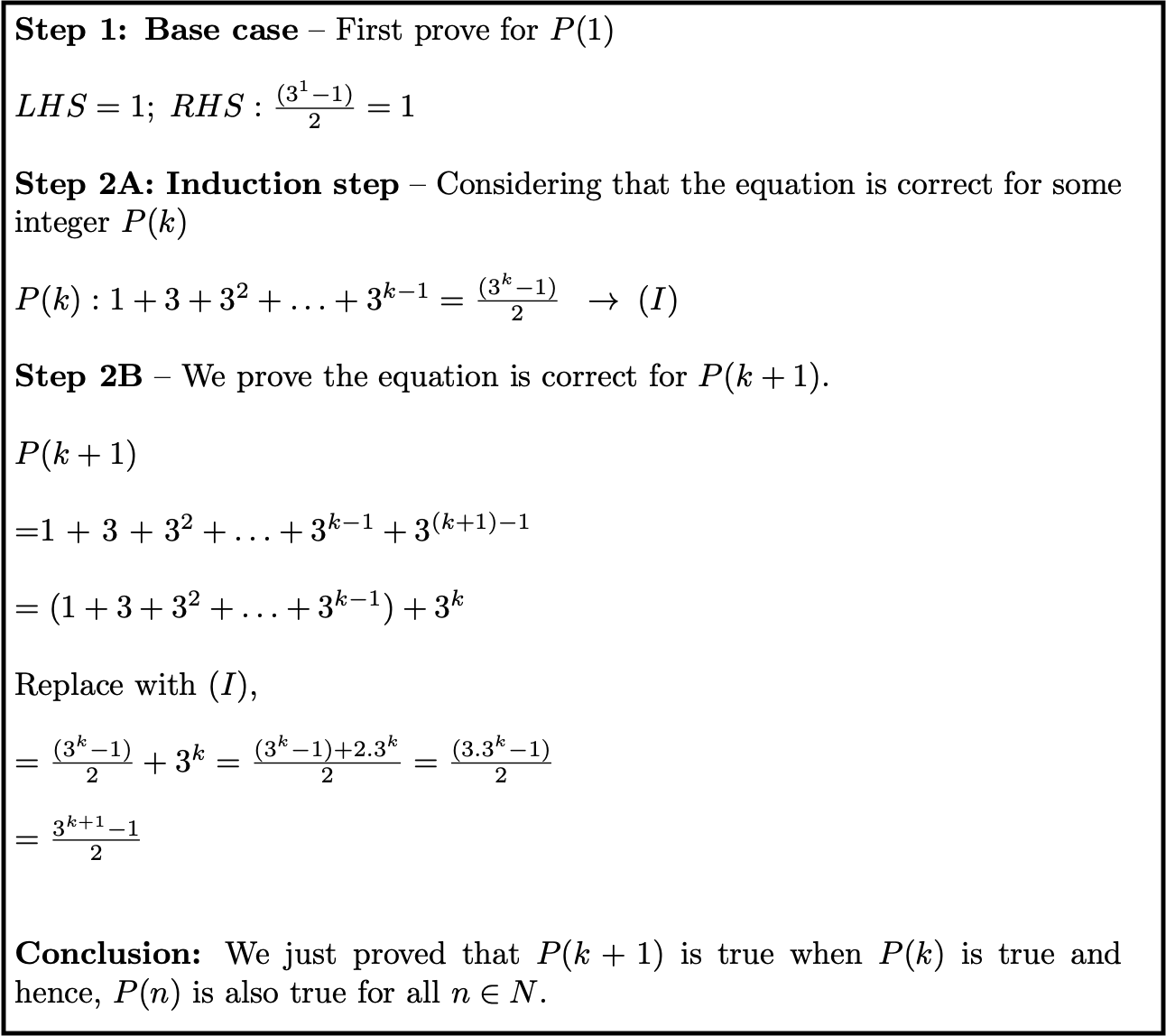

Formal proof: You might recall from high school math that mathematical induction is the right way to exhaustively prove this equation.

The main takeaway from this section is that,

Simulation is not the only method to check if a circuit is doing what it is supposed to do. There is another approach called Formal Verification, which is capable of exhaustively proving that a design works.

The issue with Formal Verification

However, Formal isn’t without its own issues.

Consider mathematical induction from the previous section — we conveniently used it to prove the equation, but how does it actually work? How can you simply assume something to be true for n=k and then use that assumption to construct a proof for n=k+1?

Another example, consider Pythagoras’ or Euclid’s theorem. These problems use completely different mathematics and reasoning techniques to arrive at the proof.

The core issue with Formal methods is that understanding the mathematical basis for Formal proofs, which in case of hardware verification are LTL, CTL, Model Checking and Theorem Proving, is quite challenging, and coming up with fresh proof approaches is even more difficult.

Formal Verification has only recently (in the last ten years) broken away from being purely in the realm of Math and Computer Science PhDs and entered the toolkit of the average engineer.

We finally have a collection of mature tools, methodologies and proof techniques which can be learnt and applied within the constraints of aggressive tape-out schedules.

In the following section, we will look at how Intel contributed to this effort.

Formal Verification at Intel

Evolution

After the fallout from the FDIV problem in 1995, Intel knew it needed to do something different. At the time, there were no established alternatives to simulation. But, Intel correctly identified that the answer lies in the field of, what I will loosely call, Automated Reasoning.

The idea behind automated reasoning is to have a computer program prove mathematical theorems or, given a formal model of a system (such as a state transition diagram), have a program tell you if a certain equations are true in that system. Automated theorem proving first saw success in the 1950s. But further research in the field was largely motivated by the desire to improve solvers/proof assistants as well as software/systems reliability (See Edgar Dijkstra’s essay On Reliabilty of Programs). It was only in the 1980s when researchers began to explore the effectiveness of this approach in hardware verification.

Fast forward back to 1995, there were really three strategies worth exploring for hardware verification — Model Checking, SAT solvers and theorem provers. Ed Clarke, the co-inventor of Model Checking, said this in his 2007 Turing Award Lecture.

When Intel made the announcement that there was a real problem with the Pentium 1 chip, a former CMU student who had taken one of my classes, Manpreet Khaira (then a Principal at Intel), called me up and said “send me one of your students”.

I sent him Xudong Zhao, who was my best student at the time. Xudong spent 3-4 months at Intel and was able to show that his PhD thesis topic, Word Level Model Checking, could indeed have found the Pentium error and moreover he was able to show that the fix Intel had come up with did indeed fix the problem.

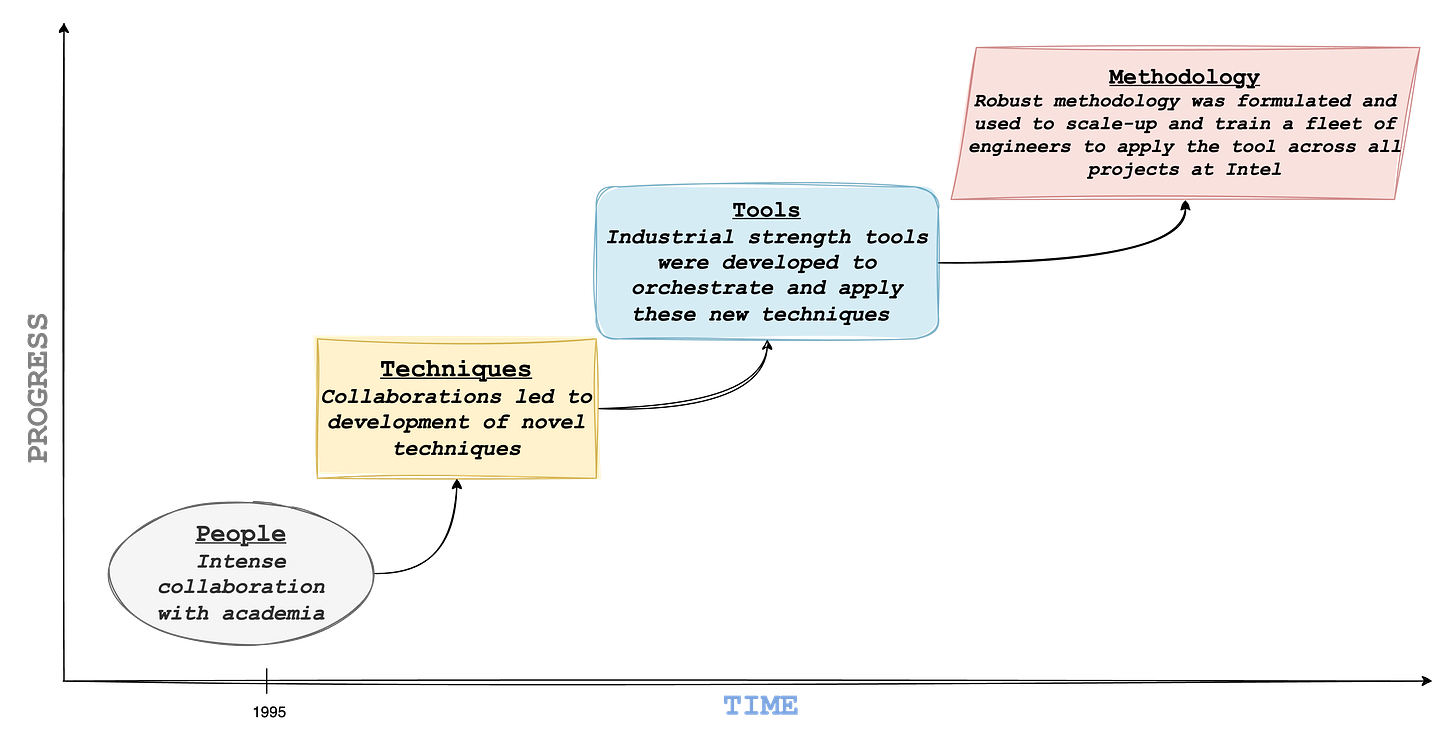

Ed Clarke, 2007 Turing Award LectureSubsequently, Intel committed time and money in developing Formal Verification. This chart summarizes Intel's evolution in this field.

People

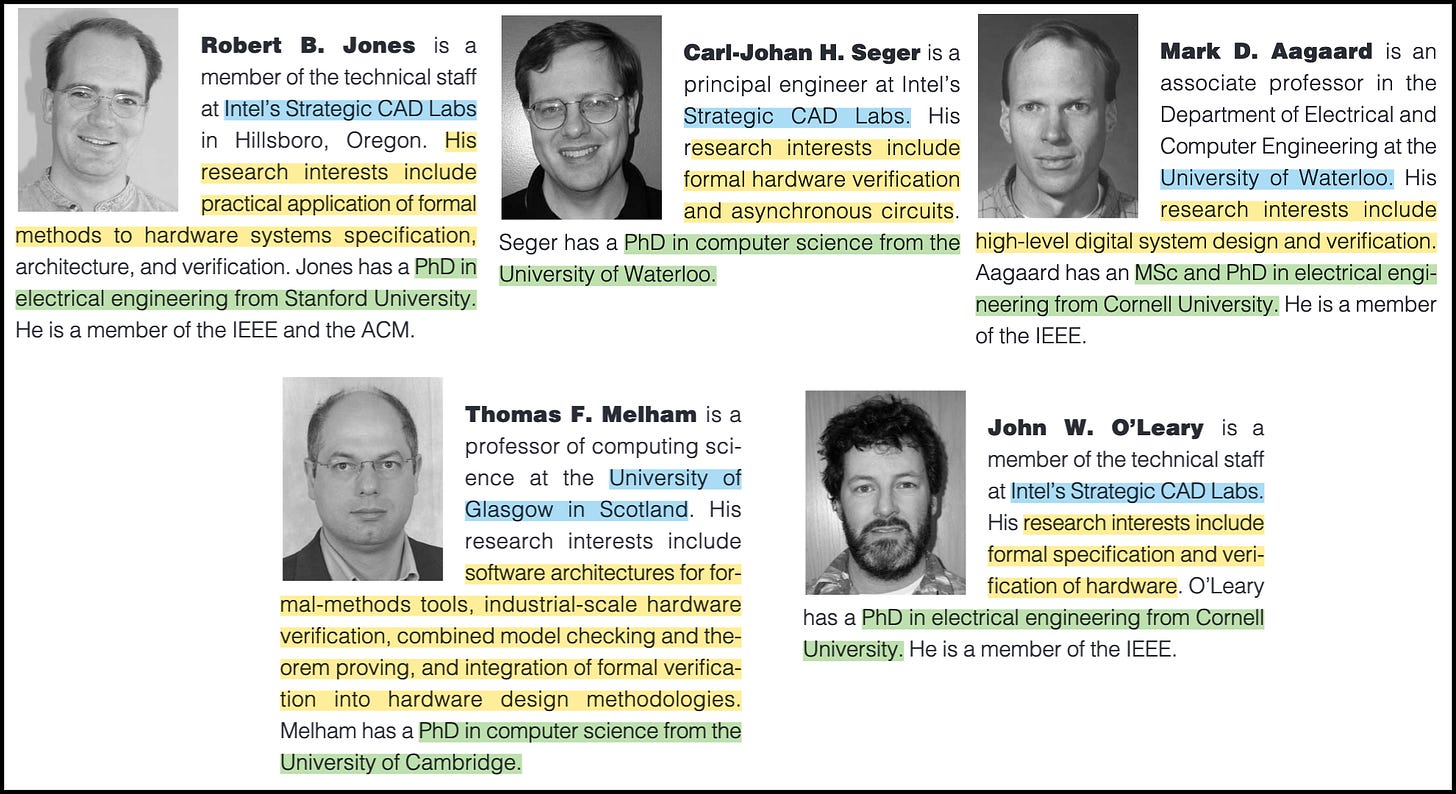

When I scanned through the research published between 1995 and 2010, I noticed that practically every researcher from around the world who worked in the field of Model Checking or Automated Theorem Proving did a stint at Intel. By bringing these experts into the same cauldron new Formal techniques and methods were published at a brisk speed. (Calling it a cauldron seems appropriate given the heat Intel was experiencing at the time).

Techniques

Working with Intel gave these researchers the opportunity to apply their ideas to a real-world problem and, more importantly, iterate on these ideas until they matured and were robust enough for an industry setting.

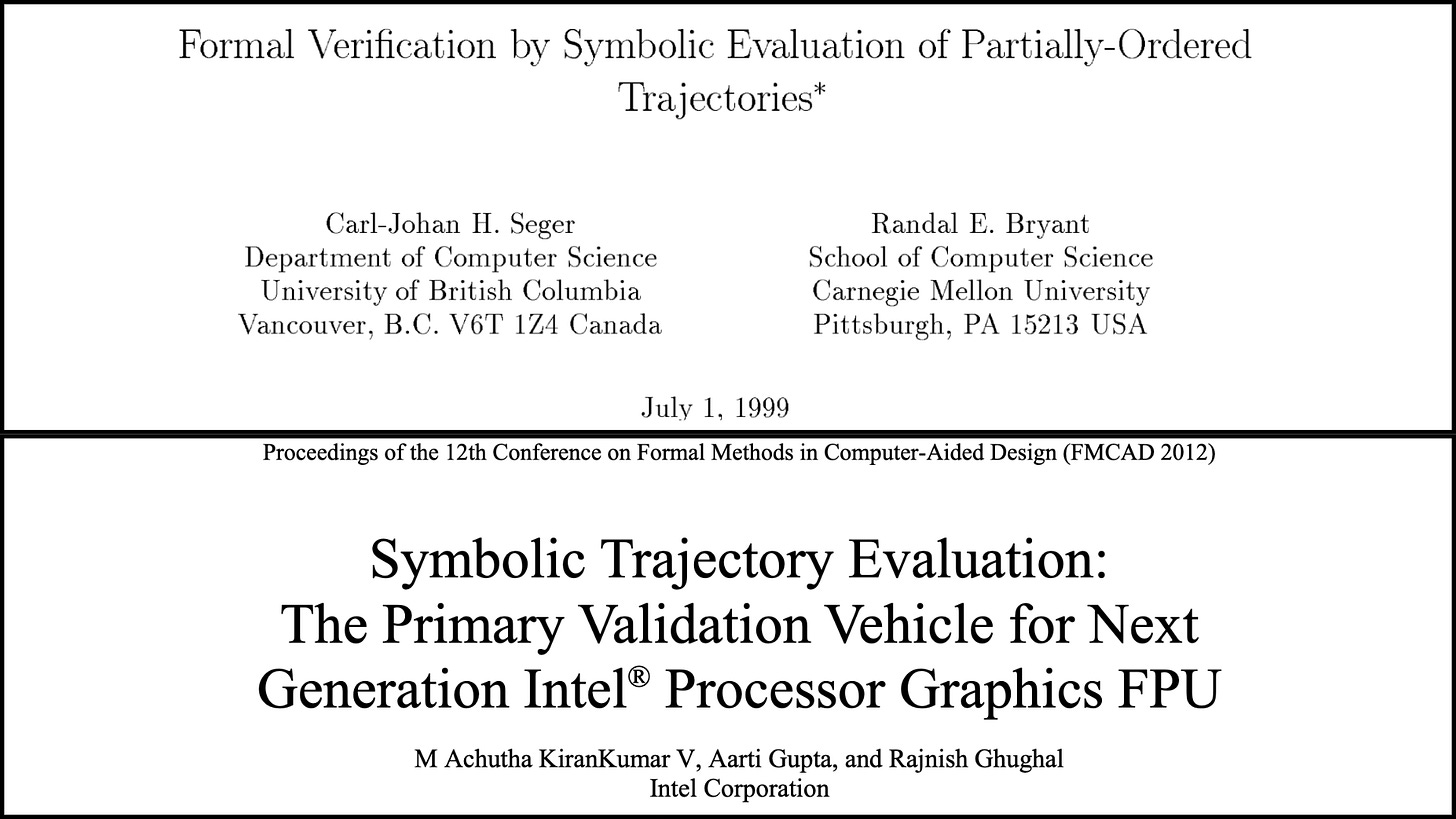

For example, vanilla Model Checking has been known to have capacity issues. It only allows the user to reason with small designs, nowhere sufficient for something like the Intel Pentium processor. Several creative abstractions had to be constructed to boost the capacity of Model Checkers. The best idea to emerge from these academic collaborations was Symbolic Trajectory Evaluation (STE), which was the brainchild of Carl H. Seger.

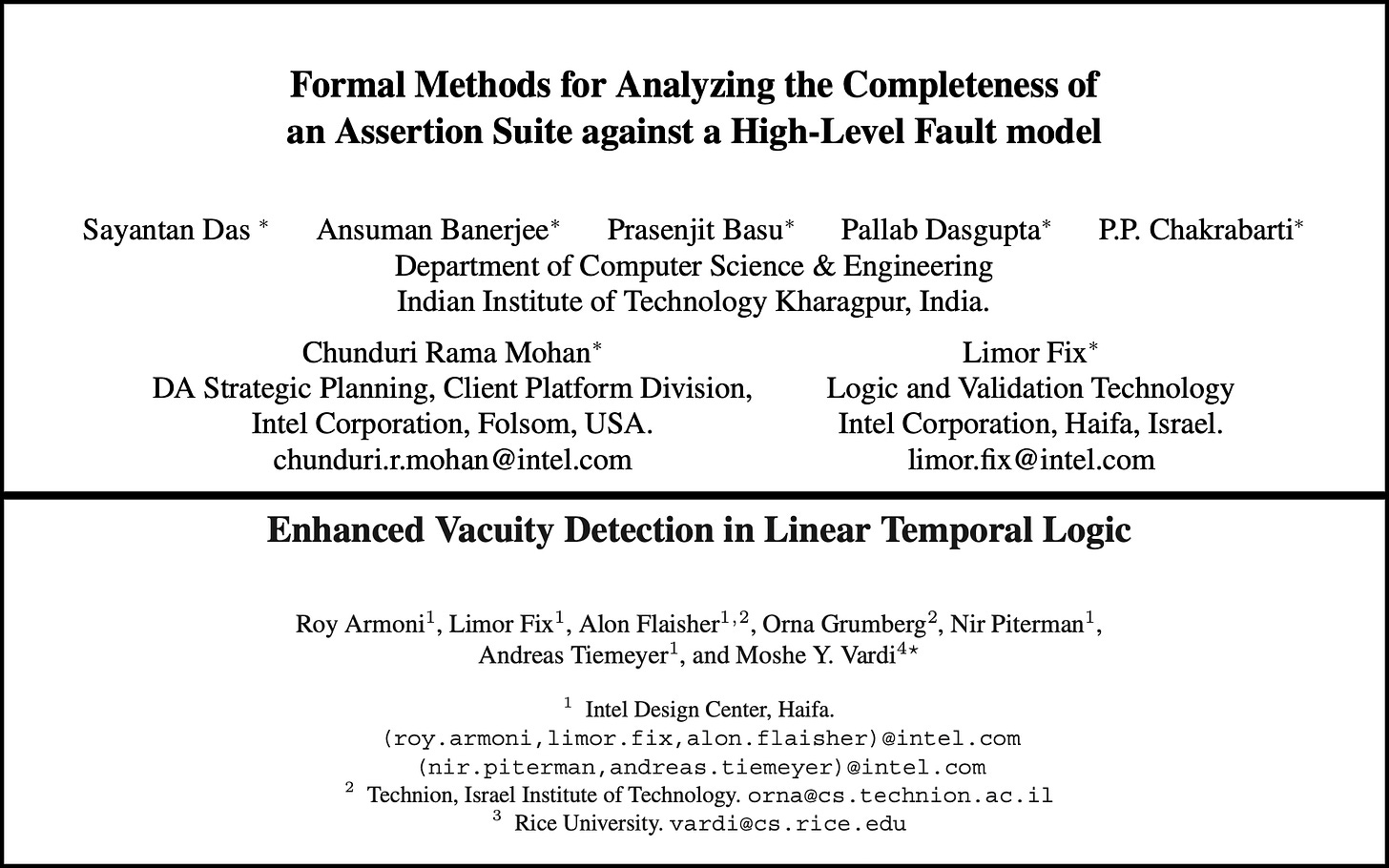

But, even a venerable technique such as STE is only as good as the operator who uses it. Limox Fix and few others published seminal work on answering the question “Are we done, yet?”, that is, determining whether the Formal analysis of a design is indeed complete and there are no open holes.

Even here, there was a significant degree of engagement with academia, as shown in the papers below.

Tools

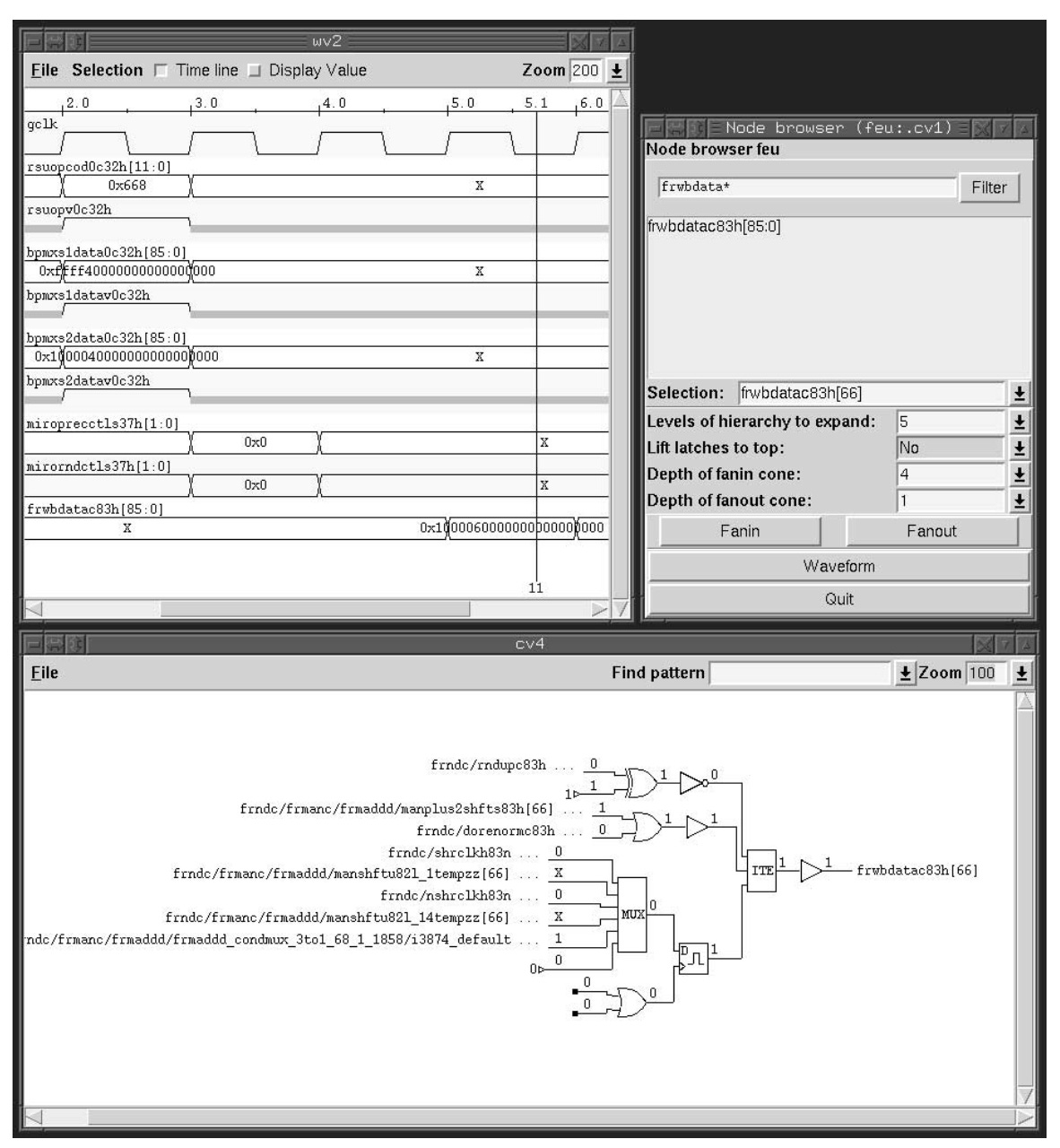

In order to Formally verify a chip design, which is typically represented as RTL, it has to first be synthesized into a suitable form, such as a Binary Decision Diagram (BDD). In 1993, while at the University of British Columbia, Carl Seger developed a tool called VOSS. After joining Intel in 1995, he collaborated with others like Tom Melham to developed its successor called Forte. It was designed specifically for industrial-scale circuits, with a unified interface to a BDD, a Model Checker, a SAT solver and a Theorem Prover [3, 6]. Making it seemless to verify a circuit by applying various techniques, such as STE.

Along with a tool like Forte, you require Formal semantics to write properties and ask questions about the design, such as, “if event-A occurs, then does event-B occur 3 clock cycles later?” For this they created a Formal Specification language called ForSpec.

ASIC/SoC engineers will be delighted to learn that Intel donated ForSpec to Accellera and major parts of it were adopted during the creation of the SystemVerilog Assertions language (SVA). SVA is the current industry standard using which Formal Verification is performed.

Methodology

In order to perform effective Formal Verification and produce high quality results, apart from tools and techniques, the Formal team at Intel also developed a robust methodology, spelling out how these tools should be applied. This was critical in democratizing Formal Verification within Intel and ensuring its adoption across projects in the company.

Here’s a snippet from “Making Formal Property Verification Mainstream at Intel” by M Achutha KiranKumar, et. al.

A 2-week dedicated FV training was conducted for all FV champions and interested designers. The training catered to meet the expectations of varied expertise level users. The training included topics such as FV basics, FV applications for specific verification problems, and working demos. The identified FV champions were given additional trainings on tackling convergence issues and on more complex areas. The response seen in trainings was very encouraging, with almost 85 engineers becoming formally literate. A round-the-clock formal assistance network from various resources was available after the training.

Conclusion

In some ways, the FDIV bug served as a blessing in disguise for the semiconductor industry. Intel helped bring an esoteric field from academic research into mainstream chip development. Today, Formal Verification has grow into a thriving micro-industry with the major three CAD companies (Cadence, Synopsys and Siemens) selling advanced tools akin to Forte and providing training as well. Now every chip company considers Formal Verification a mandatory part of their chip development process.

As a cherry on top, more recently, engineers from Intel’s FVCTO (Formal Verification Central Tech Office) which houses some of the best Formal Verification engineers, published the definitive book on Applied Formal Verification upon which engineers like me have come to rely - Formal Verification: An Essential Toolkit for Modern VLSI Design, Erik Seligman et, al.

If you want to get started with Formal Verification, I recommend these three in-depth tutorials I’ve written on systemverilog.io.

References

Many of the key references are included as images in earlier sections. This reference list offers a broader selection of interesting papers, along with brief commentary on a few. It’s also a small gesture of appreciation for the support from my patrons.